The fine folks at Torrent Freak have been all puffed up with righteous indignation that companies like Comcast have taken to undermining their file-swarming activities. They’re active participants in the inevitable arms race that followed: users try to maximize their throughput, the ISP tries to minimize congestion, users bypass the ISP’s mechanism, ISP implements new mechanism, etc. That’s fine. It’s the invisible hand of the market and all that good stuff. Friday, one of their contributors took the high road and posted a list of proposed solutions to the Bittorrent problem. A heavily-snipped summary:

- Ask for voluntary cooperation.

- Keep connections within the provider’s network.

- Usage-based quotas.

- Limit the total connections allowed at one time per user.

- Build out networks to handle the increased load and pass the cost onto the consumer.

- Cancel the service of users who abuse their privileges.

The author has the presence of mind to present downsides for each of these, but missed the major downside for each. I suspect that Torrent Freak as a whole is blinded by its own bias. People expect to get whatever it was they felt they paid for, and if you tell them that there is some limitation on what they’re paying for and your competitor doesn’t tell them (or denies the existence of the limitation), you lose business. tl;dr follows:

In asking for voluntary cooperation, imposing set quotas, and canceling abusive customers, there is an implied step of the ISP making public some limitation on the capacity of the network. Every ISP has limits to the amount of throughput and number of packets it can handle at any given time between two points, whether it’s an internal transaction or otherwise. The series of tubes is only so big. It is not, however, common practice for ISPs to publicize what their capacities are. My employer is a bit of an exception to this, with our CEO rather frequently making reference to the size of our backhauls from AT&T’s ATM cloud, as well as the nature of our internal cross-connects between Santa Rosa, San Francisco, and San Jose. But that’s very unusual. You cannot reasonably expect Comcast or Verizon or Charter or AT&T to pipe up about their transit situation. Doing so unilaterally opens you up to the possibility of some rather damaging PR attacks. What would happen if AT&T ran an ad campaign touting what the actual per-customer available bandwidth was on Comcast’s network? Pandemonium, at least among Comcast’s shareholders. So Comcast and other ISPs must look after themselves and not take that step.

If the limitations of the network are not spelled out, then we already know what happens. Accusations of glass-ceiling secret usage quotas will fly about. People will publish copies of their disconnect notices, warnings, or requests to scale back usage. Again the public trust in the speed and reliability of the network is undermined to the detriment of the stock value. Not a good option.

Billing for usage, unilaterally, is a non-starter in the market. If I charge you per *mumble*byte (kilo, mega, giga, tera, whatever), I’m setting myself up for disaster. What happens when some script kiddy gets upset about how you pwned him in Team Fortress and decides to ping flood your connection? Would you be charged for virus activity? Some guy that cracked your WEP from the parking lot to download prons? Nothing can undermine your relationship with a customer faster than billing him more than he expected, even if your system keeps track of all the little tiny charges properly.

Keeping connections within the network makes a lot more sense, but has a big-brother aura about it that strikes a nerve these days. What with the NSA already hiding in your wall jacks, retroactive immunity for malfeasant ILECs, and Google tracking every webpage you click through to with their absurdly long-lived cookies and ubiquitous Adsense system, spending tons of money for specialized equipment that reads and presumably tracks customer transaction behavior is an invitation to public outcry and legal action. Not pretty.

Limiting the number of simultaneous connections per user is just silly. Any mechanism for doing so that didn’t completely break old workhorse protocols like NNTP or FTP would either be laughably trivial to bypass or turn out to be exactly what the Torrent Freak article was suggesting alternatives to. C’mon, folks.

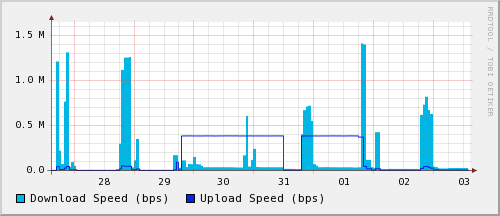

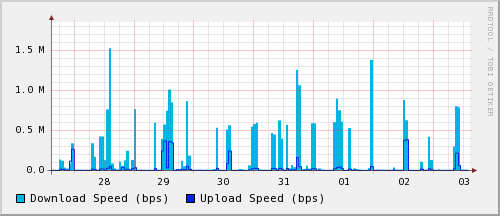

Building out the network to simply be able to take the load is probably the best available option, but is essentially just surrendering to a handful of bandwidth hogs that are likely downloading tons of stuff they never even use (mirroring newsgroups is my favorite overuse of bandwidth). When dealing with thousands of customers with multiple-mbps connections, ISPs have always relied on a bit of statistical optimism. Most people don’t use most of their capacity most of the time. If you take a random time of day and pick 100 random DSL customers, together they might be using twice the total bandwidth allotment for one of them. That’s just the traffic pattern. There’s variation, of course, but that’s what concepts like Weighted Fair Queueing are for.

> I am not sure what is considered abuse, but limitation is really stupid. If you pay for lets say a 3 mgb pipe, that is what you should have access to the WHOLE time. I don’t care what the bandwidth is used for.

Ah, but aggregated over thousands of users, a 3mbps uplink is pretty cheap — assuming that people use the connection like normal people — so you can offer it at a substantially lower price than an actual dedicated link. Most broadband subscribers use significantly less than 5% of their maximum throughput, averaged out over the course of a given month. This means you could get 200 such customers onto 36mbps of actual capacity without really having to sweat the congestion too much. In practice you’d want to keep an eye on the actual peaks as you provision customers onto that backhaul, but these are some made-up numbers.
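To make that back-of-the-envelope provisioning concrete, here's a minimal Python sketch. It uses the same made-up numbers (200 customers, 3mbps lines, a 36mbps backhaul) and assumes, as a simplification, that every customer averages exactly 5% of their line. The function names are mine, purely for illustration.

```python
def expected_load_mbps(customers, line_rate_mbps, avg_utilization_pct):
    """Average aggregate demand if every customer uses the same share of their line."""
    return customers * line_rate_mbps * avg_utilization_pct / 100


def oversubscription_ratio(customers, line_rate_mbps, backhaul_mbps):
    """Bandwidth sold to customers divided by the capacity actually provisioned."""
    return customers * line_rate_mbps / backhaul_mbps


# Made-up figures from the example above.
CUSTOMERS = 200
LINE_RATE_MBPS = 3
AVG_UTILIZATION_PCT = 5   # assumed flat average; real usage is burstier
BACKHAUL_MBPS = 36

sold_mbps = CUSTOMERS * LINE_RATE_MBPS
load_mbps = expected_load_mbps(CUSTOMERS, LINE_RATE_MBPS, AVG_UTILIZATION_PCT)
headroom_mbps = BACKHAUL_MBPS - load_mbps
ratio = oversubscription_ratio(CUSTOMERS, LINE_RATE_MBPS, BACKHAUL_MBPS)

print(f"sold: {sold_mbps} mbps, average load: {load_mbps:.1f} mbps")
print(f"headroom on the backhaul: {headroom_mbps:.1f} mbps")
print(f"oversubscription: {ratio:.1f}:1")
```

The headroom is what absorbs the peaks. An average tells you nothing about how many people hit "download" at the same moment, which is why you'd still watch the real graphs.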

Now, with a roughly 17:1 ratio of sold bandwidth to available bandwidth, your overhead is pretty low. Then pile eight guys onto that same backhaul with a penchant for running Bittorrent full-tilt 24/7. They’ll tie up 24mbps of that pipe, leaving the other 192 customers with 12mbps of spare bandwidth. The ISP has to get a bigger pipe to avoid congestion. Cost to the ISP goes up, so the price for everybody’s got to go up.
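Here's the arithmetic with the eight full-tilt users added, as a rough sketch. It assumes the 36mbps backhaul and 3mbps lines from the example, that each heavy user saturates his line around the clock, and that everyone else averages 5% of theirs. The helper names are hypothetical.

```python
def remaining_capacity_mbps(backhaul_mbps, heavy_users, line_rate_mbps):
    """Capacity left once the heavy users have saturated their lines."""
    return max(backhaul_mbps - heavy_users * line_rate_mbps, 0)


def typical_demand_mbps(customers, line_rate_mbps, avg_utilization_pct):
    """Average aggregate demand from customers with ordinary usage."""
    return customers * line_rate_mbps * avg_utilization_pct / 100


# Made-up figures carried over from the example.
BACKHAUL_MBPS = 36
LINE_RATE_MBPS = 3
TOTAL_CUSTOMERS = 200
HEAVY_USERS = 8
AVG_UTILIZATION_PCT = 5   # assumed average for everyone else

others = TOTAL_CUSTOMERS - HEAVY_USERS
left_mbps = remaining_capacity_mbps(BACKHAUL_MBPS, HEAVY_USERS, LINE_RATE_MBPS)
demand_mbps = typical_demand_mbps(others, LINE_RATE_MBPS, AVG_UTILIZATION_PCT)
share_kbps = left_mbps * 1000 / others
congested = demand_mbps > left_mbps

print(f"{HEAVY_USERS} heavy users take {BACKHAUL_MBPS - left_mbps} of {BACKHAUL_MBPS} mbps")
print(f"{others} other customers want about {demand_mbps:.1f} mbps, have {left_mbps} mbps")
print(f"that is {share_kbps:.1f} kbps each if split evenly; congested: {congested}")
```

Under those assumptions the ordinary customers' average demand alone exceeds what's left, before you even account for peaks.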

Compare the price of an OC3 to your monthly connectivity bill. Then ask yourself if you’re subsidizing your neighbor or if it’s the other way around. I know what the answer is if you’ve been pulling down packets as fast as you can. Are you really paying for that 3mbps connection?

Everything works better when we all try to play nice.